1. 分析 Fluid 挂载 NFS 存储

- 查看 Fuse Pod

| |

在启动之后,Fuse 会将存储目录挂载到 Node 上; 在停止之前,卸载存储目录。

- 查看 Fluid 注入的配置文件

| |

| |

Fluid 会将 Dataset 中的 mountPoint 配置通过 Json 文件挂载注入到 Fuse 中。

- 查看 Fuse 的启动脚本

| |

| |

Fuse Pod 启动时,会解析 config.json 文件,生成 mount-nfs.sh 脚本,并执行。

2. 打包 Fluid Lustre Runtime 镜像

从上面的分析,我们看到对于这种 mount 挂载类型的文件存储服务,只需要打包一个对应的 Fuse 镜像即可接入 Fluid 进行管理。

- 创建 fluid_config_init.py 脚本

| |

只需调整一下 mount 命令即可。

- 创建启动脚本 entrypoint.sh

| |

- 创建 Dockerfile 打包镜像

| |

编译镜像并推送镜像

| |

3. Lustre 接入 Fluid

- 创建 Dataset

| |

注意这里的 mountPoint,如果需要挂载子目录 subdir,请提前创建。在生产过程中,多个 PVC 可能会共用一个 Lustre 后端。

子目录挂载的格式为: fs-x.fsx.us-west-2.amazonaws.com@tcp:/x/subdir

- 创建 Runtime

| |

| |

- 创建 Pod

| |

4. 性能测试

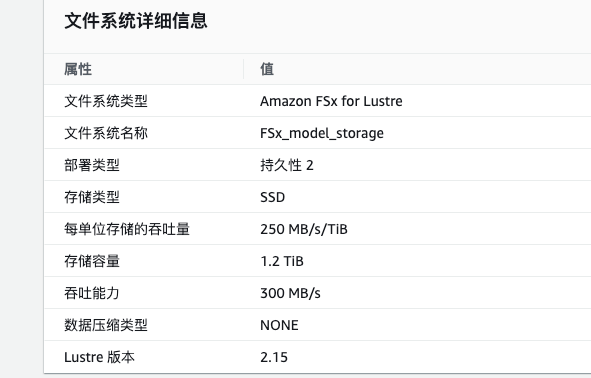

下图是在 AWS 上申请的 FSx for Lustre 规格。

4.1 直接挂载在主机上顺序读测试

- 安装 lustre-client

| |

注意需要执行 sudo reboot 重启下机器。

参考文档 https://docs.aws.amazon.com/zh_cn/fsx/latest/LustreGuide/install-lustre-client.html

- 执行测试

| |

4.2 在 Pod 中顺序读测试

- 进入 Pod

| |

- 执行测试

| |

Fluid 中,ThinRuntime 的 PVC 性能与主机直接挂载的性能,不会损失很多。这里需要注意,blocksize 和 size 的大小会严重影响测试的结果。如果只读取 1g 的数据,顺序读的性能可以达到 500+ MB/s; 如果 blocksize 为 128k,顺序读的性能又只有 100+ MB/s。因此,需要根据使用场景进行调整,才能准确评估。

5. 总结

最近国内的模型推理服务需要在海外进行部署,我们选定了 AWS FSx for Lustre 作为存储后端,但为了保持业务层使用存储逻辑的一致性,需要将 Lustre 对接到 Fluid 中。

国内的模型上传到 S3 之后,自动同步到 Lustre 中。

Fluid 早期版本就支持 Lustre,但 Fluid 社区中并没有提供详细的文档描述和 Demo 示例,因此本篇主要记录了使用 Fluid 的 ThinRuntime 对接 Lustre 的实践过程。

由于我们仅用来存储推理模型,模型数据通常都是大文件,因此在性能测试方面仅测试了顺序读的速度。在我们选的规格下,PVC 中的速度能达到 300+ MB/s。